The Future of Data: Top Trends in the Data Quality Management Market

The Data Quality Management (DQM) market is moving towards a more automated, more predictive, and more deeply embedded approach, one that treats data quality as a continuous, proactive process rather than a reactive, manual effort. The market is projected to reach USD 10.69 billion by 2035, growing at a 9.22% CAGR from 2025 to 2035, and three trends are set to define the next generation of these platforms: using artificial intelligence to automate discovery and remediation, extending data quality principles into data observability, and making data quality a collaborative, self-service function for the entire business.

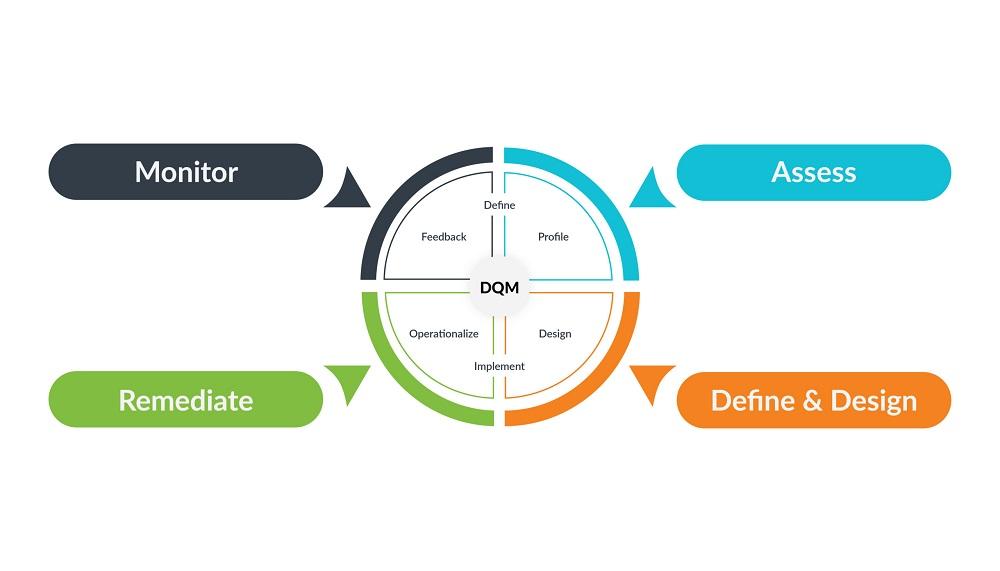

The most significant trend is the deep integration of artificial intelligence (AI) and machine learning into the core of data quality management platforms. AI is automating many of the most time-consuming manual tasks in the field. Machine learning algorithms can scan a dataset to discover its structure, identify potential quality issues, and suggest appropriate data quality rules, work that used to require a skilled data analyst. AI also powers more sophisticated data matching and de-duplication, and it can detect complex, anomalous patterns that may indicate a quality issue.
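As a rough illustration of automated profiling and rule suggestion, the sketch below uses simple heuristics as a stand-in for the machine-learning models a real platform would apply. The function names (`profile_column`, `suggest_rules`), the rule labels, and the sample records are all hypothetical and chosen only for this example.

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: null rate, distinct count, and dominant type."""
    non_null = [v for v in values if v is not None and v != ""]
    types = Counter(type(v).__name__ for v in non_null)
    return {
        "null_rate": 1 - len(non_null) / len(values) if values else 0.0,
        "count": len(non_null),
        "distinct": len(set(non_null)),
        "dominant_type": types.most_common(1)[0][0] if types else None,
    }


def suggest_rules(rows):
    """Propose candidate rules per column from basic profile statistics.

    A heuristic stand-in (assumption): real tools would learn these
    suggestions from the data rather than hard-code the thresholds.
    """
    columns = {key for row in rows for key in row}
    suggestions = {}
    for col in sorted(columns):
        stats = profile_column([row.get(col) for row in rows])
        rules = []
        if stats["null_rate"] == 0:
            rules.append("not_null")
        if stats["count"] and stats["distinct"] == stats["count"]:
            rules.append("unique")
        if stats["dominant_type"]:
            rules.append(f"type:{stats['dominant_type']}")
        suggestions[col] = rules
    return suggestions


# Hypothetical sample records.
rows = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": "b@example.com", "country": "DE"},
    {"id": 3, "email": None, "country": "FR"},
]
suggested = suggest_rules(rows)
for column, rules in suggested.items():
    print(column, rules)
```

The suggestions are only candidates: a column that happens to be unique in a small sample is not necessarily a key, so a person would still review them before they are enforced.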

Another major trend is the evolution from traditional data quality to the broader concept of "data observability." Data quality has traditionally focused on the static quality of the data itself. Data observability is a more holistic and dynamic approach that monitors the health of the entire data pipeline. It involves not just checking the data for errors, but also monitoring its freshness, its volume, and its structure (schema) as it moves through different systems. This gives a data engineering team an early warning of problems in the pipeline, such as a delayed data feed or an unexpected schema change, before they affect the business downstream. That early warning is central to keeping modern, complex data stacks reliable.
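The three pipeline signals named above (freshness, volume, and schema) can be sketched as small checks. This is a minimal example under stated assumptions: the function names, the 24-hour freshness window, the 50% volume tolerance, and the sample metadata are all hypothetical, not taken from any particular observability product.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_loaded_at, now, max_age):
    """True if the most recent load is no older than max_age."""
    return (now - last_loaded_at) <= max_age


def check_volume(row_count, recent_counts, tolerance=0.5):
    """True if row_count is within tolerance of the recent average."""
    if not recent_counts:
        return True  # no baseline yet, so nothing to compare against
    baseline = sum(recent_counts) / len(recent_counts)
    return abs(row_count - baseline) <= tolerance * baseline


def check_schema(expected, actual):
    """Return a list of differences between expected and actual schemas."""
    problems = []
    for col, typ in expected.items():
        if col not in actual:
            problems.append(f"missing column: {col}")
        elif actual[col] != typ:
            problems.append(f"type changed: {col} {typ} -> {actual[col]}")
    for col in actual:
        if col not in expected:
            problems.append(f"unexpected column: {col}")
    return problems


# Hypothetical metadata for one feed.
now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
last_loaded_at = datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)

fresh = check_freshness(last_loaded_at, now, max_age=timedelta(hours=24))
volume_ok = check_volume(400, recent_counts=[1000, 1100, 900])
schema_issues = check_schema(
    expected={"id": "int", "amount": "float"},
    actual={"id": "int", "amount": "str", "note": "str"},
)
print(fresh, volume_ok, schema_issues)
```

In this example all three checks flag a problem: the feed is 30 hours old, the row count is far below the recent average, and the schema has drifted. None of these checks inspects individual values, which is what distinguishes them from traditional rule-based quality checks.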

Finally, there is a strong trend towards the "democratization" of data quality. In the past, data quality was seen as a highly technical task owned by a central IT or data governance team. The trend now is to make data quality a shared responsibility and to empower business users, the true "owners" of the data, to manage its quality themselves. This means providing user-friendly, "self-service" data quality tools that let a business user in marketing or finance define quality rules and monitor the health of the data that matters most to them. This shift towards a more collaborative and federated approach to data governance is key to building an enterprise-wide culture of data quality.
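One way self-service tooling can work is to let business users state rules as plain data while a shared engine evaluates them. The sketch below assumes a hypothetical rule format (`name`, `field`, `check`, `arg`) and three illustrative check types; it is not the interface of any specific product.

```python
import re

# Check types the engine supports (hypothetical names for this sketch).
RULE_CHECKS = {
    "not_empty": lambda value, _arg: value not in (None, ""),
    "matches": lambda value, pattern: (
        isinstance(value, str) and re.fullmatch(pattern, value) is not None
    ),
    "min": lambda value, bound: (
        isinstance(value, (int, float)) and value >= bound
    ),
}


def evaluate(rules, rows):
    """Run each declarative rule over all rows and count the failures."""
    results = []
    for rule in rules:
        check = RULE_CHECKS[rule["check"]]
        failed = sum(
            1 for row in rows
            if not check(row.get(rule["field"]), rule.get("arg"))
        )
        results.append({"rule": rule["name"], "failed": failed})
    return results


# Rules as a marketing user might define them: plain data, no code.
marketing_rules = [
    {"name": "email present", "field": "email", "check": "not_empty"},
    {"name": "email format", "field": "email", "check": "matches",
     "arg": r"[^@\s]+@[^@\s]+\.[^@\s]+"},
    {"name": "spend non-negative", "field": "spend", "check": "min", "arg": 0},
]

# Hypothetical sample records.
rows = [
    {"email": "a@example.com", "spend": 10.0},
    {"email": "", "spend": 5},
    {"email": "not-an-email", "spend": -1},
]

report = evaluate(marketing_rules, rows)
for line in report:
    print(line)
```

Keeping the rules as data separates ownership from implementation: the business team edits the rule list, while the central data team maintains the set of supported checks.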
